New POW calculation module #1284

No reviewers

Labels

No Label

bug

build

dependencies

developers

documentation

duplicate

enhancement

formatting

invalid

legal

mobile

obsolete

packaging

performance

protocol

question

refactoring

regression

security

test

translation

usability

wontfix

No Milestone

No project

No Assignees

1 Participants

Due Date

No due date set.

Dependencies

No dependencies set.

Reference: Bitmessage/PyBitmessage-2024-12-05#1284

Loading…

Reference in New Issue

Block a user

No description provided.

Delete Branch "Kleshni/POW"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

For #1227. It's currently not connected to the PyBitmessage code, I'm going to get it done sooner or later.

It provides the

WorkProverthread class which manages tasks and schedules them to solvers. There are 4 possible solvers:Single-threaded

DumbSolverin Python. I optimised it so it now works ~2 times faster. In particular, I replacedhashlib.sha512withSHA512from OpenSSL. Since you need OpenSSL to assemble a message anyway, I think it's safe to use it in a fallback solver.Multiprocess based

ForkingSolverin Python. It works faster too, because it relies onDumbSolver.Multithreaded

FastSolverin C. I tried to utilize SIMD, but it gave very little speed up of 7 %, so it still calls OpenSSL.And

GPUSolverin OpenCL.The library was tested on a 4-core AMD64 Linux with integrated GPU and 45-CPU virtual machines with hyperthreading enabled:

bsd.mpkernel;I failed to install Hackintosh on QEMU, so somebody else has to test it there.

Have you tried frozen mode on Windows?

No, but

ForkingSolveris disabled in this case complying to the current behaviour. All file paths (to the C library and OpenCL kernel) are relative to the code directory, whose path must be provided by the calling code.@ -0,0 +1,42 @@Please keep this module independent from the outside code, so that it can be reused in other applications.In that case why can't we make it a separate package and import it where required ?

pass is the dangerous statement, please log it or please write a print statement atleast...

parallelism = self.solver.parallelism if self.solver else 0

if not name and not self.solverName

logging is required for this functionality.

if not self.solver

if not name

doc string required here

docstring here

pep8 validation

docstring here please

if not self.tasks

pep8 validation

@PeterSurda , I think we need optimization here.

@ -0,0 +90,4 @@self.currentTaskID = Nonedef notifyStatus(self):if self.statusUpdated is None:if not self.statusUpdated:

return

@ -0,0 +154,4 @@self.notifyStatus()def addTask(self, ID, headlessPayload, TTL, expiryTime, byteDifficulty, lengthExtension):add docs to the function.

@ -0,0 +223,4 @@task = self.tasks[self.currentTaskID]if task.expiryTime is None:if not task.expiryTime

@ -0,0 +234,4 @@appendedSeed = self.seed + struct.pack(">Q", self.roundsCounter)self.roundsCounter += 1try:pep8 validation

docs here and pep8 validation.

doc type required here

documentation required here

@ -0,0 +2,4 @@import os.pathimport platformimport subprocessimport sysplease write all the imports in alphabetic order

@ -0,0 +13,4 @@if platform.architecture()[0] == "64bit":suffix = "-64"pep8 validation

remove un necessary spaces

@ -0,0 +1,117 @@import multiprocessingimport ospep8 validation for the file

@ -0,0 +1,42 @@Please keep this module independent from the outside code, so that it can be reused in other applications.It's a Python package with the

__init__.pyfile, and it's intended to be imported likeimport workprover. It could be moved to a separate repository, but I think, it's easier and safer to keep it in the same file tree.self.availableSolversis visible to the outside code, so it can log or print iffastis missing. It should show a message in GUI status bar like the current code does.It's less readable.

@ -0,0 +90,4 @@self.currentTaskID = Nonedef notifyStatus(self):if self.statusUpdated is None:It's less acurate.

self.statusUpdatedmust be either a function or aNone. If it's 0 or an empty string, it would be a programming error and further call would rise an exception making the error noticable.It calls the

self.statusUpdatedcallback and the outside code should log this and display in the GUI.Seems to work for me: two "singleWorker" threads appear when doing PoW. Oh, I realized it's not connected so far :(

@Kleshni

workprover.testalso contains tabsSome tests are failing. It seems to be expected for

TestGPUSolver, what about the rest?TestSolveris not a test case, it's a base class for other test cases. Now it should be skipped during automatic test discovery.TestFastSolverfails loading compiledlibfastsolver.sofor the reason I don't know. A print in the correspondingexceptcould clarify this.Strange thing:

It seems to be specific to ubuntu:trusty or Travis-CI.

Nevertheless could you please add

pybitmessage.workprovertopackagesand decorateTestGPUSolverlike I did?I have registered in Travis-CI and debugged the issue. It was a very stupid mistake, shared libraries like

-lpthreadand-lcryptoshould be listed after object files in the linker command line.Travis-CI also gives an OS X environment, so I tested the module there.

packages/pyinstaller/bitmessagemain.specneeds updating.Could you please comment #1283 and maybe change the import order too if you agree?

Could you please also add the extension and a workaround for dh_python in Debian: a9955e5?

Maybe just invoke the makefile from

setup.py?The extension approach is more pythonic and there is no need for makefile. You can see it even in the tests log.

But there rises up a need for a workaround, while the makefile is needed anyway. And a pythonic extention is not a

ctypeslibrary, it must use Python C API to be directly importable:Imports should be placed on the top of the file with a alphabetic order.

After that from statements should be written in the alphabetic order

@ -13,2 +13,4 @@import socketimport threadingimport timefrom binascii import hexlify, unhexlifyPlease place all the imports in a order

better to place log instead of exception pass.

I hope it will be dangerous if we have pass statement.

Place this imports in the starting of the script.

Are you going to connect new POW code soon? This PR still not includes the actual use of this code for doing work.

Yes, I'm rewriting the

class_singleWorkermodule.I almost finished rewriting

class_singleWorker, but I have a problem with the function for requesting pubkeys. It uses theretrynumbercolumn in the database for detemining the TTL and rising it exponentially for every next attempt.But proper implementation of this scheme gets very complicated if two or more messages need the same pubkey and have different retry numbers. It would be wise to not send two simultaneous getpubkey requests in this case, and to not send it at all if it's already present in the inventory. So there emerges a question on how to handle the

retrynumbercolumn in such situations, and what to do with it when some other user sends a getpubkey request for a key we also need.I think we can get rid of this column, I mean use it only for resending the message itself but ignore it for getpubkeys. This would simplify the algorithm: just check there is no requests in the inventory, and send it with a fixed TTL.

The comment near the line calculating the TTL says, that the 2,5-day period was chosen "fairly arbitrarily". Maybe redefine it to the maximum 28 days? Getpubkey requests are negligibly small and are sent with the default network difficulty, they don't need too much work.

There are currently 13661 getpubkeys in the inventory, but only 84 of them have different tags! The others are duplicates, which I propose to avoid by simplifying the sending algorithm. And there are 40939 different pubkeys, so I conclude that the need for getpubkeys is very small, and there is no big reason to save on short TTLs.

Less frequent resending and not repeating already existing requests can also affect privacy in the positive way.

(Another question is that the current requesting function specifies the object type as 0 in one place and 1 in another, but I think it's certainly a bug.)

@Kleshni can I have the functional flow also along with the PR, it will be easy to test the internal things please.

If possible can you attach a doc please with some snapshots.

Sorry, I don't know what the "functional flow" is. I tried to google, but it seems, that I have to learn much new material to satisfy your request.

Snapshots of what?

Maybe I should add doc strings to functions or something like that?

I don't completely understand why the TTL randomization is needed:

Possibly, it's supposed to hide the origin node of the message because it makes neighbour nodes unable to tell whether the message was generated right now or its retranslation took some time.

But it seems very weak:

Maybe it could hide singular messages, but if a node generates messages continuously (like the time service broadcast), the expected value of the random addition approaches -0,5 - a constant.

An attacker can guess the original TTL, which is usually a multiple of 3600 seconds. If the expiry time of a received object is

currentTime + originalTTL + 299, then it's obvious, that the random addition was 299 seconds, it can't be more. In this case it hides nothing.TTL affects POW difficulty, POW difficulty affects the nonce value, the nonce value is known to everybody. It's another way to leak the real sending time.

I don't know how to solve the first two problems, but the the last can be solved by randomizing the expiry time instead:

So I propose to change the code this way.

I deleted the file

bitmessageqt/settings.py. It was originally generated by thepyuic4utility frombitmessageqt/settings.uiand was not actually needed, because*.uifiles can be used directly without conversion to*.py.The generated files must remain untouched because they need a regeneration on every change to the source files, but

settings.pywas manually modified one day. This prevented easy modification of the settings window, so I solved this by moving the changes out from this file and deleting it.The same for the main window.

@ -56,3 +51,3 @@from statusbar import BMStatusBarfrom network.asyncore_pollchoose import set_ratesimport soundimport replease write imports in the starting point of the file in the alphabetic order

can't we make it dynamic or iterable?

We just need to delete these splitters.

Added a right-click menu option to cancel a message or broadcast. "Move to Trash" now also cancels POW before deleting.

@ -52,8 +52,8 @@ else:arch=64PEP8 validation required here.

please add doc string to the class.

please add doc string to the class

please write all the imports on the starting of the file.

Please place all the imports in the starting the file...

@ -37,1 +37,4 @@def resendStaleMessages():staleMessages = sqlQuery("""SELECT "toaddress", "ackdata", "status" FROM "sent"Pep8 validation here.

@ -161,0 +195,4 @@stream, readLength = addresses.decodeVarint(payload[readPosition: readPosition + 9])readPosition += readLengthtag = buffer(payload[readPosition: readPosition + 32]) # May be shorter than 32 bytes for getpubkeyspep8 validation here.

if not name and not self.solverName:

pass

if self.solver:

….

if name:

….

@ -0,0 +1,262 @@import Queueplease place all the imports in a alphabetic order.

These imports must be conditional.

Added API methods for dealing with raw objects in the inventory, solving #1225 and superseding #1226.

disseminateRawObjectArgument: a hex-encoded object without packet headers, starting from nonce.

Tries to send the object to the network. POW must be already calculated.

Returns an error or the object's inventory hash.

getRawObjectArgument: an inventory hash.

Returns an error or a JSON object with the following fields:

hash- the inventory hash;expiryTime- a UNIX timestamp;objectType- an integer;stream;tag- hex-encoded object tag;payload- hex-encoded object without packet headers, starting from nonce.listRawObjectsParameters: the desired object type or

None, stream number orNone, hex-encoded tag orNone.Returns a list of hex-encoded hashes.

queueRawObjectArguments: TTL in seconds and a hex-encoded object without packet headers, nonce and expiry time, starting from object type.

Queues the object for POW calculation and sending to the network.

Returns a unique handle to track the objects' state.

cancelQueuedRawObjectArgument: a handle returned from the

queueRawObjectmethod.Tries to cancel a previously queued object.

checkQueuedRawObjectArgument: a handle returned from the

queueRawObjectmethod.Returns an array with the first element being the current status of the object:

queued;doingwork;failedfor invalid objects;sent. In this case the second item of the array is the hex-encoded inventory hash;canceled;notfoundfor wrong handles and for handles that previously returnedfailed,sentorcanceled.If a queued object enters the

notfoundstate, it can mean that the daemon was restarted, because the queued objects are not saved to the disk.I deleted the

getMessageDataByDestination{Hash,Tag}methods because they were undocumented and didn't work at all, so I concluded that no-one ever used them. They are now replaced bygetRawObjectandlistRawObjects.The same for

disseminatePreEncryptedMsganddisseminatePubkey.undefined name 'GPUSolverError'

I got 3 additional threads (

workprover.WorkProverinstances) which don't stop when I runsrc/pybitmessage.py -t:https://travis-ci.org/g1itch/PyBitmessage/builds/411231409

This patch helped:

Any plans on merging?

your POW branch works nicely here ! great job !

@Kleshni I haven't looked at it yet, but it first needs to pass all the code quality checks and all commits need to be signed (you can squash commits if it helps).

However, others gave good feedback so chances are good.

Also, please split this into multiple patches. The PoW, the API and the other changes should be separate. You'll have better chances of getting it merged that way too.

@Kleshni would it be ok if I assigned someone else to the task to help you with cleaning it up? You'd have to give them write access to your repo.

OK.

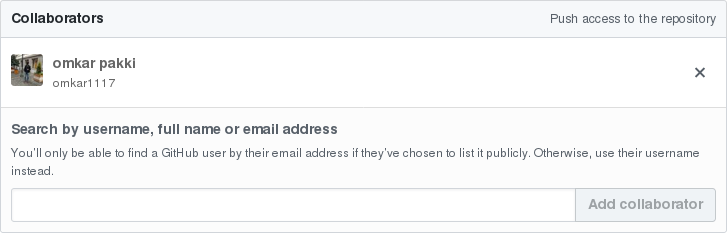

@Kleshni please give @omkar1117 write access to the POW branch. this way your commits will be preserved and you can still get paid through tip4commit.

I have sent him an invite yesterday but forgot to notify you 👀

@Kleshni could you please resolve the conflicts please.

@Kleshni Omkar says he doesn't have write access. He could fork it into his own repo, but since this PR is already open I would prefer to continue this way. Can you check and let me know?

This is what I see in the repository settings: